At this point, the argument is no longer about whether artificial intelligence (AI) is coming. The shift has already arrived. But when it comes to technological change, we know the biggest challenge is never the technology itself. It is community alignment. Right now, many of our schools are facing a mix of emotional responses. We see teachers who are enthusiastic yet terrified, parents who are anxious and unsure how to navigate this new landscape, and students who are deeply curious but often lack the skills to use these tools to genuinely support their learning. Perspectives and experiences vary, and because of this we are often moving in different directions.

Top-down AI policies often fail because of the disconnect between administrative intent and classroom reality. A compliance-only approach creates resistance and, worse, resentment among stakeholders. If we focus only on policing, we miss the opportunity to nurture innovation and bring positive change to teaching and learning. To move forward, the healthy, organic path is a community-wide roadmap. This roadmap must be built on shared knowledge, a clear understanding of how AI is used in our specific context, and a consensus on how we support our students both inside and outside the classroom. Aligning this message is critical if we want to lead our community forward in a sustainable way.

Step 1: Laying the Foundation

We want everyone’s voices to be heard, especially when it comes to a shift as important as AI. At this stage, our primary goal is to ensure that all stakeholders feel included. Before we even consider the next specific step, we must revisit our school’s unique context: our core mission, our values, our definition of learning, and our specific competencies.

One way to lead this change is to form a diverse AI Steering Committee. This group should represent every corner of our community: teachers, leaders, support staff, and tech leads. Their first job is not to write guidelines. Instead, their role is to frame the entire conversation through the lens of the school’s mission.

The purpose of this phase is to deeply understand the hopes and fears of each group before a single document is drafted. We can run separate listening sessions tailored to each audience: coffee chats or surveys for parents, focus groups for students at different year levels, and discussions during staff or department meetings for teachers.

We need to ask the right questions.

Step 2: Co-Creating the Guidance Document

Once we have listened, we can begin to build. We view the guidance document not as a rulebook, but as a "lighthouse" that guides our ships (students, teachers, parents, and administration) safely through the AI voyage.

The steering committee should use the data gathered in Step 1 to create a draft. This document must address the practicalities: data privacy, bias, intellectual honesty, and transparency. It must also tackle academic integrity, or link to an updated academic integrity policy, by defining clear use cases, misuse cases, and appropriate citation methods. Crucially, it needs to support teachers in handling integrity concerns with care.

This document serves different needs for different groups. For students, it focuses on age-appropriate guidelines, AI literacy, attribution, and academic honesty. For staff, it addresses professional efficiency, curriculum enhancement, and explicit guidelines on when AI can be used in assignments. For administrators, it clarifies that their role is to provide the vision, resources, and oversight to ensure our AI integration is safe, effective, and equitable.

But a draft is just a starting point. We must take it back to the community and explicitly invite all stakeholders to contribute. We can host workshops, create working groups, or send feedback forms. The goal is to ensure that when the final document is shared within the community, every stakeholder sees their voice and concerns reflected in it. This process ensures the message aligns across the entire community.

To endure, this document should be relatively short, easy to read, and agnostic of specific platforms. Since the AI landscape changes constantly, it should serve as a reference for turning our principles into best practices. It must be reviewed annually, evolving as our pedagogy around AI in learning and teaching evolves.

Step 3: Identifying the Pathways to Innovation

With our "lighthouse" complete, we can confidently empower innovation through practical steps that bring the roadmap to life. While the steering committee handles the planning, we need to empower champions through an "AI Working Group." This small, supported cohort of teachers serves as our pilots, given the time and resources to explore new methods. Their job is to experiment, fail fast, and bring their findings back to the wider staff.

Alongside these pilots, we must recognize that AI literacy cannot be one-size-fits-all and requires differentiated learning experiences.

Finally, to reduce confusion and risk, we should create a safe ecosystem by maintaining a school-verified list of student-facing and teacher-facing AI tools that meet our ethical and privacy standards. This gives teachers and students a safe "sandbox" to explore. Our pilot groups can test these tools in class, collecting data to see if they truly work for our school context before wider adoption.

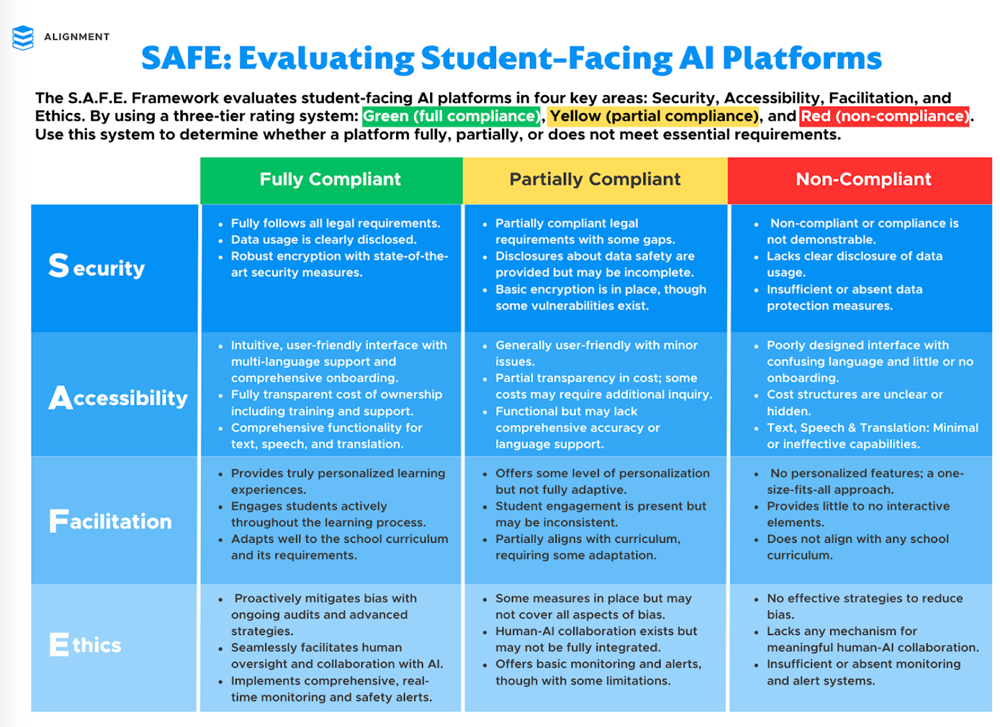

To support this vetting, we have developed a framework using the acronym S.A.F.E. The S.A.F.E. Framework evaluates student-facing AI platforms in four key areas: Security, Accessibility, Facilitation, and Ethics. It uses a three-tier rating system, Green (full compliance), Yellow (partial compliance), and Red (non-compliance), to determine whether a platform meets our essential requirements.

Step 4: Making It Stick

An AI roadmap is a living process, not a PDF that gathers dust. After six to 12 months, we should conduct a formal whole-school survey to follow up on our initial listening sessions. We need to ask: How are you using AI? Do you feel supported? Where are the new friction points? If a major change is implemented, surveying before and after allows us to measure the impact vertically and horizontally across the school.

We must then use this data to iterate through an annual review. We should schedule a regular time to revisit the guidance document, the vetted tool list, and the professional development plan. The needs of our staff will change. Some will advance quickly, while others will need foundational support. Our roadmap must be agile enough to meet them where they are. This maintenance builds community trust and ensures our lighthouse is always shining in the right direction.

From Roadmap to Culture

AI is a huge change for every stakeholder in the community. Building an AI roadmap is less about technology and more about community leadership and alignment. By starting with listening, collaborating on guidance, and empowering our people to embrace innovation, we move from a place of fear and confusion to one of alignment and opportunity. Our goal is not to create a set of AI guidelines as fast as possible. Our goal is to build a culture of open-mindedness and responsible exploration that prepares our students for a future we cannot yet predict. To achieve this, a collaborative community roadmap is the first and most critical step.

Read The Inevitable Shift: AI Has Already Arrived and listen to Cora and Dalton chat about AI with TIE Director Stacy Stephens on the Voice of TIE Podcast: Meeting the Moment: How Educators are Embracing AI With Intention.

Cora Yang is a Learning Tech coach at Chinese International School Hong Kong and the co-founder of Alignment Education. She is an educational innovator who believes that technology should serve, celebrate, and elevate the human experience of learning. At her core, she is an educator, driven by a deep commitment to the growth and wellbeing of every student. In her roles, she puts this philosophy into practice. Cora partners with school leadership teams and classroom educators to demystify emerging technologies, particularly Artificial Intelligence. She excels at translating the complexities of AI into practical, actionable strategies that can be implemented school-wide. Her passion and mission are to ensure that innovation is always guided by pedagogy, creating accessible and powerful learning experiences that prepare students for the future with both wisdom and heart.

LinkedIn: https://www.linkedin.com/in/cora-yang/

Dalton Flanagan is the Learning Innovation coach and Innovation Lab coordinator at Chinese International School Hong Kong. In this role, he focuses on bridging the gap between established pedagogy and the future of technology. He is a leader in educational innovation with over a decade of experience spanning Primary Years Programme (PYP), Middle Years Programme (MYP), International General Certificate of Secondary Education (IGCSE), and A-Level frameworks. Dalton’s work is centered on designing authentic learning experiences that embed generative AI tools. Leveraging his deep background in Computer Science and Design, he equips students and fellow educators with the skills to use generative AI tools to deepen inquiry and enhance creativity. As a key voice shaping the conversation around generative AI, he has authored and implemented comprehensive generative AI guidance documents for international schools, establishing frameworks for their strategic and ethical use. He is also the Co-Founder of Alignment Education.

LinkedIn: https://www.linkedin.com/in/dalton-flanagan/